Developing robust assessment in the light of Generative AI developments


Project summary

The Open University has been awarded just under £45,000 by the NCFE Assessment Innovation Fund to carry out research in order to develop evidence-based guidance on the strengths and weaknesses of assessment types in the light of Generative AI (GAI) tools.

Through the testing of 55 different assessments, covering a variety of assessment types and subject disciplines, the project aims to discover which assessment types are the most robust, and which are the easiest, for learners to answer with the assistance of GAI. The project will also test whether simple VLE-based training for markers on detecting AI-generated answers improves their detection abilities.

Methodology

Our research questions are:

- Which assessment types are the most robust, and which are the easiest, for learners to answer with the assistance of GAI?

- Can simple training improve the ability of markers to identify AI-generated assessment solutions?

The project is a larger-scale follow-up to one conducted in the School of Computing and Communications (Richards et al., 2024). That project established the proof of concept that markers assessing a mixture of genuine student scripts and those generated by AI could provide evidence regarding the robustness of assessment types. It also pointed towards characteristics of AI-generated answers that could be used in generalised training to improve all markers' ability to detect such answers.

The project will involve the testing of around 50 assessments involving 27 different assessment types from all faculties and at all undergraduate OU levels (including access). For the selected questions, a number of answers will be generated by ChatGPT4. These will be mixed with anonymised authentic student answers selected at random from presentations prior to 22B (at which point the use of generative AI became widespread). Associate Lecturers (ALs) will be recruited from the relevant modules to mark the scripts. They will initially allocate a grade to half of the scripts and indicate whether they consider any to have been generated by AI. The markers will then complete online (VLE-based) training on detecting answers generated by AI. After the training, the markers will allocate a grade to the remaining half of the scripts and again indicate whether they consider any to have been generated by AI.
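The before-and-after design described above lends itself to a simple comparison of detection outcomes. The following is a minimal sketch, not the project's actual analysis: the `Judgement` record, the `detection_rates` function and the sample data are all hypothetical, introduced only to illustrate how the share of AI scripts correctly flagged and the share of genuine scripts wrongly flagged (false positives) might be tabulated for each marking phase.

```python
from dataclasses import dataclass


@dataclass
class Judgement:
    """One marker decision on one script (hypothetical record layout)."""
    phase: str     # "before" or "after" the marker training
    is_ai: bool    # ground truth: was the script generated by AI?
    flagged: bool  # did the marker flag the script as AI-generated?


def detection_rates(judgements, phase):
    """Return (true_positive_rate, false_positive_rate) for one phase."""
    subset = [j for j in judgements if j.phase == phase]
    ai = [j for j in subset if j.is_ai]
    genuine = [j for j in subset if not j.is_ai]
    # Share of AI scripts that were flagged, and share of genuine scripts
    # that were wrongly flagged; NaN when a group is empty.
    tpr = sum(j.flagged for j in ai) / len(ai) if ai else float("nan")
    fpr = sum(j.flagged for j in genuine) / len(genuine) if genuine else float("nan")
    return tpr, fpr


# Made-up illustrative records, NOT project data.
sample = [
    Judgement("before", True, False),
    Judgement("before", True, True),
    Judgement("before", False, False),
    Judgement("before", False, False),
    Judgement("after", True, True),
    Judgement("after", True, True),
    Judgement("after", False, True),
    Judgement("after", False, False),
]

for phase in ("before", "after"):
    tpr, fpr = detection_rates(sample, phase)
    print(f"{phase}: detected {tpr:.0%} of AI scripts, "
          f"wrongly flagged {fpr:.0%} of genuine scripts")
```

Reporting both rates side by side matters because training could raise detection of AI scripts while also raising the number of genuine scripts that are wrongly flagged.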

The research has been approved by HREC and the staff research panel.

Outputs

Analysis of the results from approximately 950 scripts will determine which assessment types are the weakest or strongest, in terms of whether answers generated by AI achieve high or low marks, and, to some extent, whether the outcomes differ across disciplines and levels. It will also enable the project team to determine whether a short piece of training material improves the markers’ ability to identify answers generated by AI.

The project team hopes to report by summer 2024, and to provide guidance which can be used by module teams across the OU and externally to inform future assessment design. If the training is effective, we hope to be able to make it available to all OU tutors and possibly to external institutions.

Findings

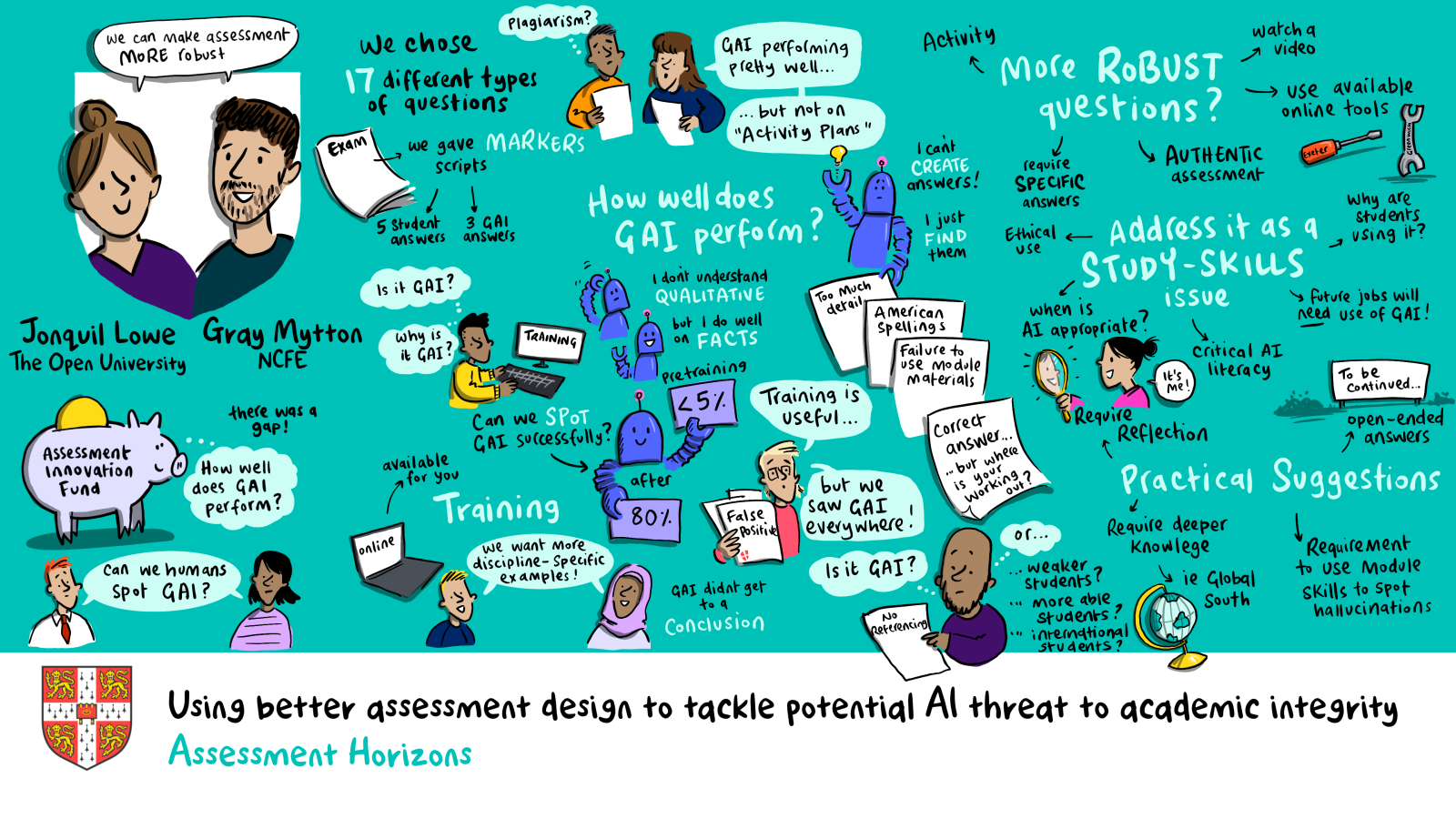

The 17 assessment types (assessed over 59 questions) in this research were generally not robust in the face of GAI: either the GAI answers performed well and achieved a passing mark, or marker training increased the number of false positives. The most robust assessment types were audience-tailored, observation by learner and reflection on work practice, which align with what is often called ‘authentic assessment’[1], although GAI answers to these were still capable of achieving a passing grade.

Given the increase in false positives after training, focusing on detection of GAI answers may not be feasible and would require substantial institutional capacity. The research team therefore recommended that institutions should focus on assessment design, risk assessing their questions and rewriting those where GAI answers are most likely to perform well and/or the number of false positives is likely to be high. The characteristics common in answers generated by AI (called the hallmarks of GAI) can be used to inform more robust question and marking guidance design, so that students have to complete tasks which are more difficult for GAI tools to replicate well.

Impact

This research will enable the development of guidance applicable across all further education and higher education institutions and awarding bodies to promote the use of meaningful, robust and evidence-based assessment. This will benefit learners by giving institutions more confidence in continuing to use a variety of assessment types rather than examination-only assessment, and in adapting learning, teaching and assessment in the light of GAI. It will also support educators in improving assessment so that it is fair and inclusive for learners.

The development of an evidence-based short piece of training to help markers detect GAI answers will assist educators in feeling more confident about the continued use of coursework.

For further information, please contact the following:

FBL: [email protected]

FASS: [email protected]

WELS: [email protected]

STEM: [email protected]

Events

How to ensure robust assessment in the light of Generative AI developments

Have you been wondering how robust your assessments are against AI? This session will report on large-scale research carried out by The Open University, funded by NCFE. The research aimed to identify the assessment types that are most and least robust when answered by Generative AI (GAI), to enable some comparison across subject disciplines and levels, and to assess the effectiveness of a short training programme to upskill educators in recognising scripts containing AI-generated material.

The research team will share the results including the performance of GAI across a range of different assessments and the impact of training on markers. They will suggest how assessment can be made more robust in light of GAI developments and recommend how higher education institutions might adopt AI-informed approaches to learning, teaching and assessment.

This is a free online event: 25th September, 13:00 to 14:00

To register: Microsoft Virtual Events Powered by Teams

References

Richards, M., Waugh, K., Slaymaker, M., Petre, M., Woodthorpe, J. and Gooch, D. (2024) ‘Bob or Bot: Exploring ChatGPT’s Answers to University Computer Science Assessment’, ACM Trans. Comput. Educ., vol. 24, no. 1. DOI: 10.1145/3633287.

Funding body

Assessment Innovation Fund (NCFE)